Baldur's Gate III - Larian Studios

I worked as a technical sound designer on Baldur's Gate III, alongside the fantastic audio team at Larian Studios. On the project, I wrote many Python scripts for Wwise to streamline the asset generation pipeline. I also helped with technical implementation, both in Wwise and in Larian's in-house game engine, and worked on sound design and sound systemics.

Impacter Wwise Plugin - Audiokinetic

I worked on the C++ implementation of the Impacter Wwise plugin. This was an extremely fun project: I translated Python audio analysis and synthesis code into C++ using the Eigen library, and designed the simple plugin UI. The UI exposes physics-inspired parameters that let users cross-synthesize impact sounds, simulate shrinking or enlarging objects, and alter the impact velocity and position. I also built a destructible demo environment in Unreal Engine in which all of the sound was implemented with Impacter, and the plugin parameters were driven by the game physics.

Wwise Unreal Spatial Audio Features - Audiokinetic

I worked extensively on the Wwise Unreal integration at Audiokinetic. This included the 'componentization' of Spatial Audio features such as Rooms, Portals, Geometry and Acoustic Textures. In other words, I created individual components for these features so that they can be used in custom Blueprints as well as through Spatial Audio Volumes. Building on this componentization, I added functionality to the Unreal integration that automatically estimates the Decay and HF Damping values for a reverb effect when Reverb and Geometry Components are used, by analysing the geometry to which they are attached. I also updated how acoustic textures are assigned to surfaces on Spatial Audio Volume objects, and how they are displayed in the viewport.

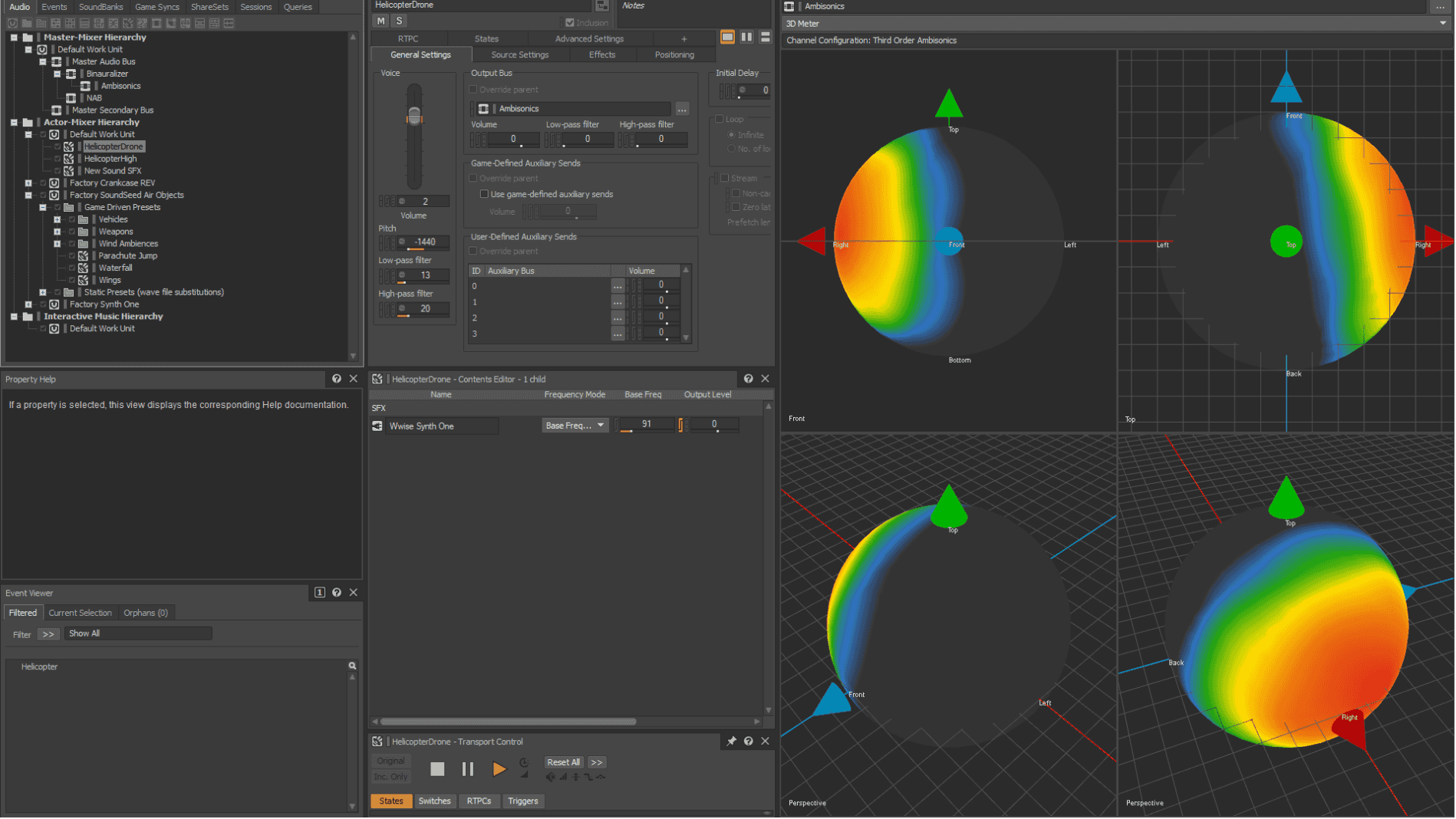

Ambisonics 3D Meter - Audiokinetic